Composer AI and Building Productivity Apps

What is Composer AI?

Composer AI is a services-led engagement designed to accelerate the adoption of generative AI.

It’s a full-stack AI deployment for OpenShift that provides every component a generative AI solution needs: the required operators, data sources, LLMs, and a custom application for querying the LLM.

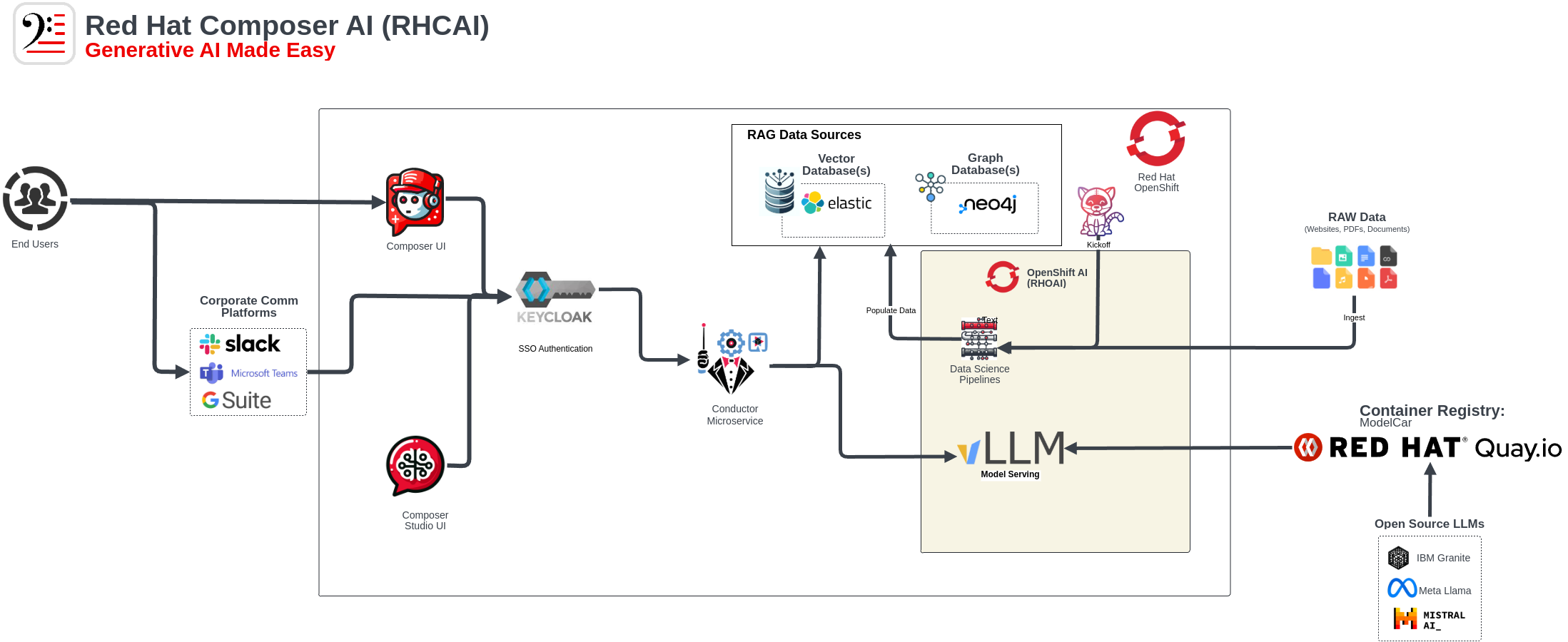

Architecture

The diagram above shows the high-level architecture of Composer AI. Its major components are:

- Cluster GitOps Repository: contains all the OpenShift components that Composer AI requires.
- App of Apps Repository: installs the Composer AI-specific components.
- Chatbot UI: PatternFly user interface for building and interacting with assistants.
- Conductor: Quarkus API that uses LangChain4j, through the Quarkus LangChain4j extension, for its AI functionality.
- Data Ingestion: Kubeflow Pipelines (KFP) pipeline that runs automatically and can also serve as an example for ingesting your own data.
- LLM: vLLM serving runtime using a ModelCar that contains a Granite model.
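To make the data flow between these components more concrete, here is a minimal, self-contained Python sketch of the retrieval-augmented generation pattern that a stack like this typically follows: ingestion embeds document chunks into a vector store, and the API layer retrieves the closest chunks and places them in the prompt sent to the LLM. This is an assumption about the general pattern, not Composer AI's actual code. The bag-of-words `embed` function, the `InMemoryVectorStore` class, and `build_prompt` are hypothetical stand-ins for an embedding model, Elasticsearch, and the Conductor's prompt handling.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a term-frequency vector of lowercase word tokens.
    Hypothetical stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for the vector database (for example, Elasticsearch)."""

    def __init__(self) -> None:
        self.chunks = []  # list of (text, vector) pairs

    def add(self, text: str) -> None:
        # Ingestion side: embed each chunk once and store it with its vector
        self.chunks.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list:
        # Retrieval side: rank every stored chunk by similarity to the query
        q = embed(query)
        ranked = sorted(self.chunks, key=lambda c: cosine(q, c[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list) -> str:
    # API side: ground the LLM's answer in the retrieved chunks
    bullets = "\n".join(f"- {chunk}" for chunk in context)
    return f"Answer using only this context:\n{bullets}\n\nQuestion: {question}"


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("OpenShift AI can serve models with the vLLM runtime.")
    store.add("Elasticsearch can store dense vectors for similarity search.")
    store.add("PatternFly is a design system for web interfaces.")
    context = store.search("serve a model with vLLM", k=1)
    print(build_prompt("How do I serve a model with vLLM?", context))
```

A real deployment would replace the toy embedding with an embedding model and the linear scan with the vector database's nearest-neighbor search. The overall shape of the flow stays the same.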

Today’s Lab

When Composer AI is installed using the default GitOps approach, or provisioned through the demo.redhat.com catalog, the cluster comes with an instance of vLLM serving a Granite model and an Elasticsearch vector database preinstalled.

For this lab, however, the provisioned cluster includes only the Conductor API and the Composer AI UI. This lets you install your own vLLM instance using Red Hat OpenShift AI, along with an Elasticsearch database. You will also create new assistants in Composer AI, then ingest and use data from the Red Hat documentation, to more closely match what you might do in a customer environment.
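After deploying your own vLLM instance, you can query it directly, independent of Composer AI. vLLM exposes an OpenAI-compatible HTTP API, so the following standard-library Python sketch can send a chat completion request to it. `BASE_URL`, `MODEL`, and the optional bearer token are hypothetical placeholders. Substitute the endpoint and model name that your OpenShift AI deployment reports.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical placeholders: use the inference endpoint and model name
# that OpenShift AI shows for your own vLLM deployment.
BASE_URL = "https://granite.apps.cluster.example.com"
MODEL = "granite"


def build_chat_request(base_url: str, model: str, question: str,
                       token: Optional[str] = None) -> urllib.request.Request:
    """Build a POST request for vLLM's OpenAI-compatible chat completions route."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "max_tokens": 256,
        "temperature": 0.2,
    }
    headers = {"Content-Type": "application/json"}
    if token:
        # Only needed when token authentication is enabled for the model server
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def ask(base_url: str, model: str, question: str,
        token: Optional[str] = None) -> str:
    """Send the request and return the text of the first choice."""
    request = build_chat_request(base_url, model, question, token)
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    # OpenAI-style responses put the reply in choices[0].message.content
    return payload["choices"][0]["message"]["content"]
```

For example, `ask(BASE_URL, MODEL, "What is OpenShift AI?")` returns the model's reply as a string once the placeholders point at a running endpoint. The request is built separately from the network call, which lets you inspect the payload before sending it.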