(Optional) Try Your Own Document

This section guides you through creating your own website assistant.

Ingestion

We’ll use a Tekton pipeline called "Website Ingestor" to ingest data from any website.

- Run this pipeline with a URL pointing to your chosen website(s) and load the data into a specific index in Elastic.

-

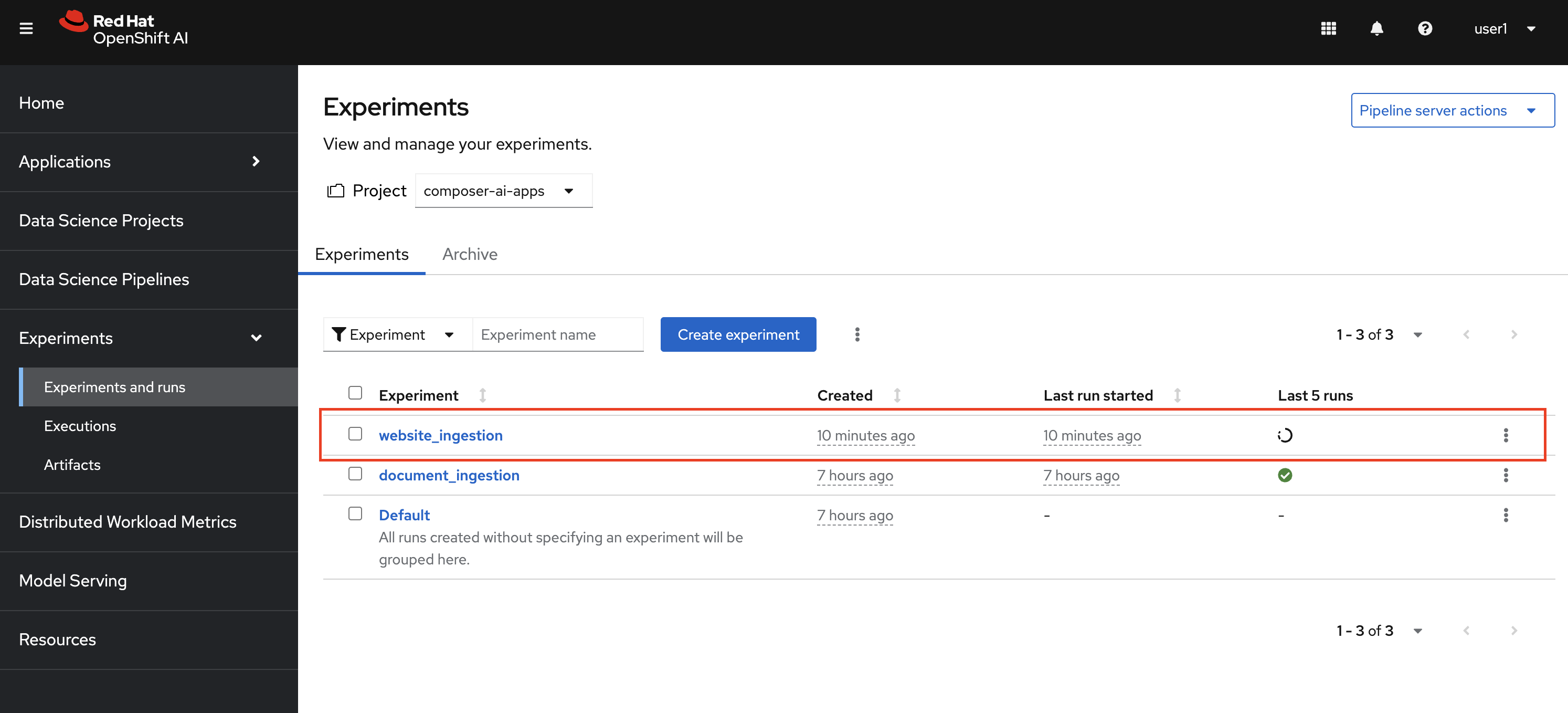

Move to RHOAI, and check under expriments section to see website ingestion expriment running.

- Track the experiment and wait for it to complete successfully. Note: the process and store task takes a few minutes to complete the ingestion, so this is a good moment to get a coffee.


Create Website Assistant

- Create a Knowledge Source named "My Knowledge Source" using the Tekton pipeline from the previous section, pointing to your specified index.

![Create new Knowledge Source](diy_create_new_KS.png)

- Move on to the Chat Bot UI and create a new Knowledge Source based on the new vector index created in step 1. Note: provide the DB index name in lowercase, and use http://elasticsearch-es-http:9200 as the host.

- Create an Assistant named "My Assistant" using "My Knowledge Source."

![Create new Assistant](diy_create_new_assistant.png)

-

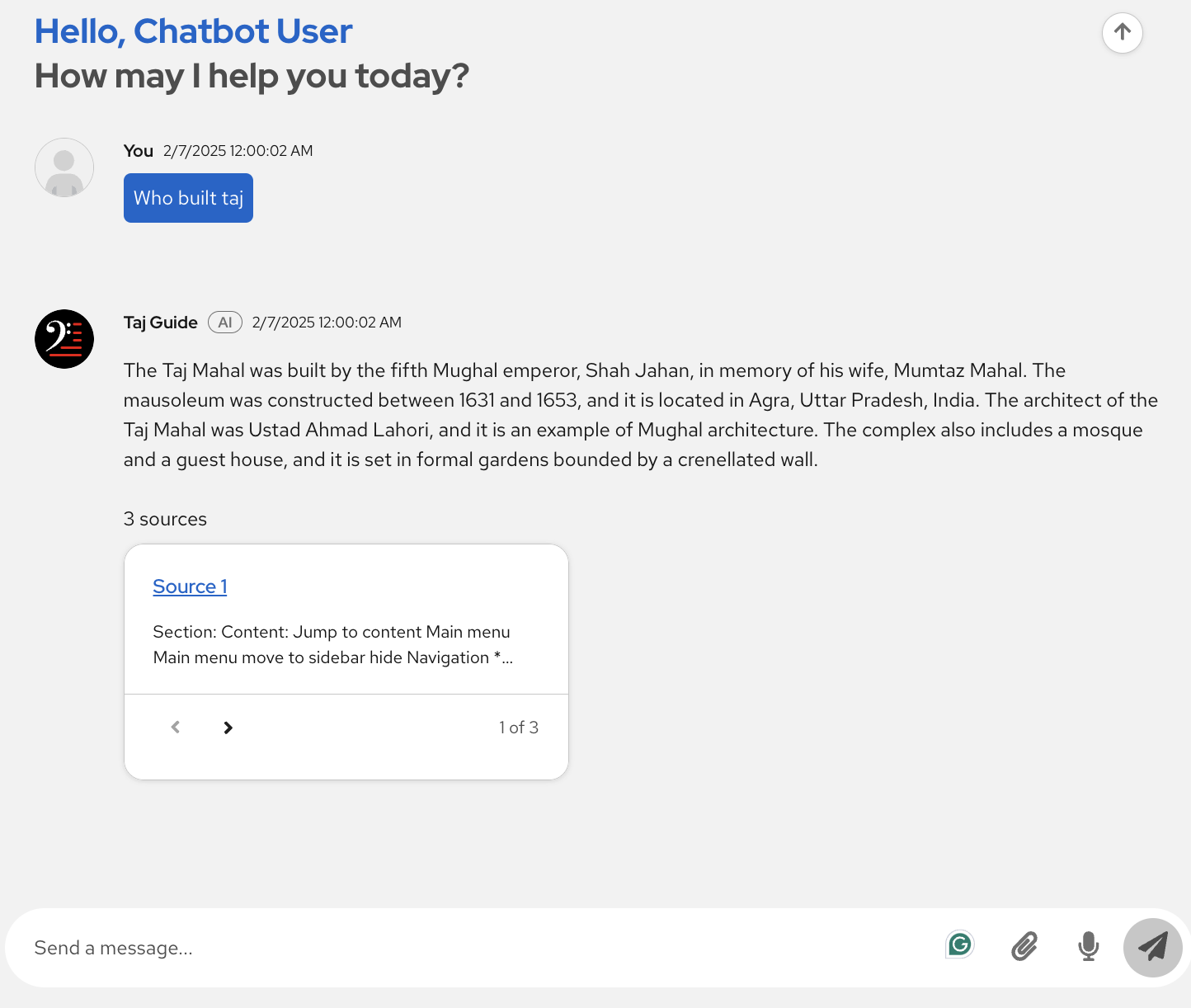

Have fun with the newly create chat bot
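If the new Knowledge Source returns no results, one quick check is whether ingestion actually wrote documents to the index. The sketch below uses Elasticsearch's `_count` endpoint against the host given above. It assumes the cluster is reachable from where you run it and accepts unauthenticated HTTP, which may not hold in your environment. The index name `my-website` is a hypothetical placeholder.

```python
import json
import urllib.request


def count_url(host: str, index: str) -> str:
    """Build the _count endpoint URL for an index (index names must be lowercase)."""
    return f"{host.rstrip('/')}/{index.strip().lower()}/_count"


def count_documents(host: str, index: str, timeout: float = 10.0) -> int:
    """Return the number of documents in the index via the _count API.

    Assumption: no authentication or TLS is required; adjust for your cluster.
    """
    with urllib.request.urlopen(count_url(host, index), timeout=timeout) as response:
        return json.load(response)["count"]


if __name__ == "__main__":
    # "my-website" is a hypothetical index name; use the one you ingested into.
    print(count_documents("http://elasticsearch-es-http:9200", "my-website"))
```

A count of zero suggests the pipeline run has not finished or wrote to a different index.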

Testing Website Assistants

Ask your assistant questions related to the ingested content. Answers might not be perfect, especially for irrelevant questions. Here’s how to improve them:

Ingestion Improvements:

Our ingestion pipeline has minimal knowledge of the website being ingested. Tuning it for your content gives the assistant better data to work with, which leads to better answers. Areas to tune include:

- Chunking: Break down content into manageable pieces, experimenting with different sizes to find what works best for your LLM and data.

- Overlap: Include some overlap between chunks to preserve context and relationships between sentences/ideas.

- Focus: Prioritize the most relevant content, removing unnecessary elements like ads or navigation menus.

- Clean: Eliminate duplicate information to improve efficiency and avoid skewed results.
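To make chunking, overlap, and cleaning concrete, here is a minimal sketch in plain Python. It is not the Website Ingestor's actual implementation. The function names, the word-based splitting, and the default sizes are all assumptions chosen for illustration.

```python
import hashlib
import re


def clean_text(text: str) -> str:
    """Collapse runs of whitespace so chunk sizes reflect real content."""
    return re.sub(r"\s+", " ", text).strip()


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of `chunk_size` words that share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = clean_text(text).split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def drop_duplicates(chunks: list[str]) -> list[str]:
    """Keep the first occurrence of each chunk, comparing case-insensitively."""
    seen = set()
    unique = []
    for chunk in chunks:
        digest = hashlib.sha256(chunk.lower().encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(chunk)
    return unique


if __name__ == "__main__":
    pieces = chunk_words("a b c d e f g h i j", chunk_size=4, overlap=1)
    print(drop_duplicates(pieces))
```

Try a few `chunk_size` and `overlap` values against your own pages and compare the answers you get. Smaller chunks tend to retrieve more precisely, while larger ones carry more context.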

Prompt Engineering:

As we saw in a previous example, a well-crafted prompt can make a huge difference in the quality of the answer you receive.
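As one illustration, the sketch below assembles a prompt that grounds the model in retrieved chunks and tells it what to do when the context lacks an answer. The `build_prompt` helper and its wording are hypothetical and are not taken from the assistant's real prompt.

```python
def build_prompt(question: str, chunks: list[str], max_chunks: int = 3) -> str:
    """Assemble a grounded prompt from a question and retrieved chunks."""
    # Number each chunk so the model (and you) can refer back to a source.
    context = "\n\n".join(
        f"[{i}] {chunk.strip()}"
        for i, chunk in enumerate(chunks[:max_chunks], start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you do not know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


if __name__ == "__main__":
    print(build_prompt("What does the pipeline ingest?", ["The pipeline ingests websites."]))
```

Limiting the number of chunks keeps the prompt within the model's context window. The explicit fallback instruction can reduce made-up answers to irrelevant questions.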

Assistant Tuning:

Explore LLM settings (like temperature) and advanced techniques like reranking.

Note: Assistant tuning features are under development, and we are always looking for people to help contribute to our project.
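To build intuition for the temperature setting, the sketch below applies the common formulation in which logits are divided by the temperature before a softmax. The logits are made-up numbers, and the exact sampling behavior of any particular LLM server is an assumption here.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.0]  # illustrative scores for three candidate tokens
    for t in (0.5, 1.0, 2.0):
        print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

Lower temperatures concentrate probability on the top candidate, giving more deterministic answers. Higher temperatures spread it out, giving more varied answers.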

File Upload:

A file can be uploaded with the upload button and will be automatically chunked and used as an in-memory data source similar to our Vector Database.
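The idea of an in-memory data source can be sketched as follows. This toy store ranks chunks by cosine similarity over simple term counts, which stands in for the embeddings a real vector database would use. The `InMemoryStore` class is hypothetical and does not reflect how the upload feature is implemented.

```python
import math
import re
from collections import Counter


def term_counts(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric terms."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryStore:
    """Hold chunks in a list and rank them against a query on demand."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self._items.append((chunk, term_counts(chunk)))

    def search(self, query: str, top_k: int = 3) -> list[str]:
        q = term_counts(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:top_k]]


if __name__ == "__main__":
    store = InMemoryStore()
    store.add("Tekton pipelines run tasks in order")
    store.add("Elasticsearch stores vector indexes")
    print(store.search("vector indexes in Elasticsearch", top_k=1))
```

Because nothing is persisted, a store like this disappears when the session ends, which is the main practical difference from an index in Elastic.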